A few months ago, we introduced the first preview releases of the AI and Vector Data extensions—powerful .NET libraries designed to simplify working with AI models and vector stores.

Since then, we’ve been collaborating closely with partners and the community to refine these libraries, stabilize the APIs, and incorporate valuable feedback.

Today, we’re excited to announce that these extensions are now generally available, providing developers with a robust foundation to build scalable, maintainable, and interoperable AI-powered applications.

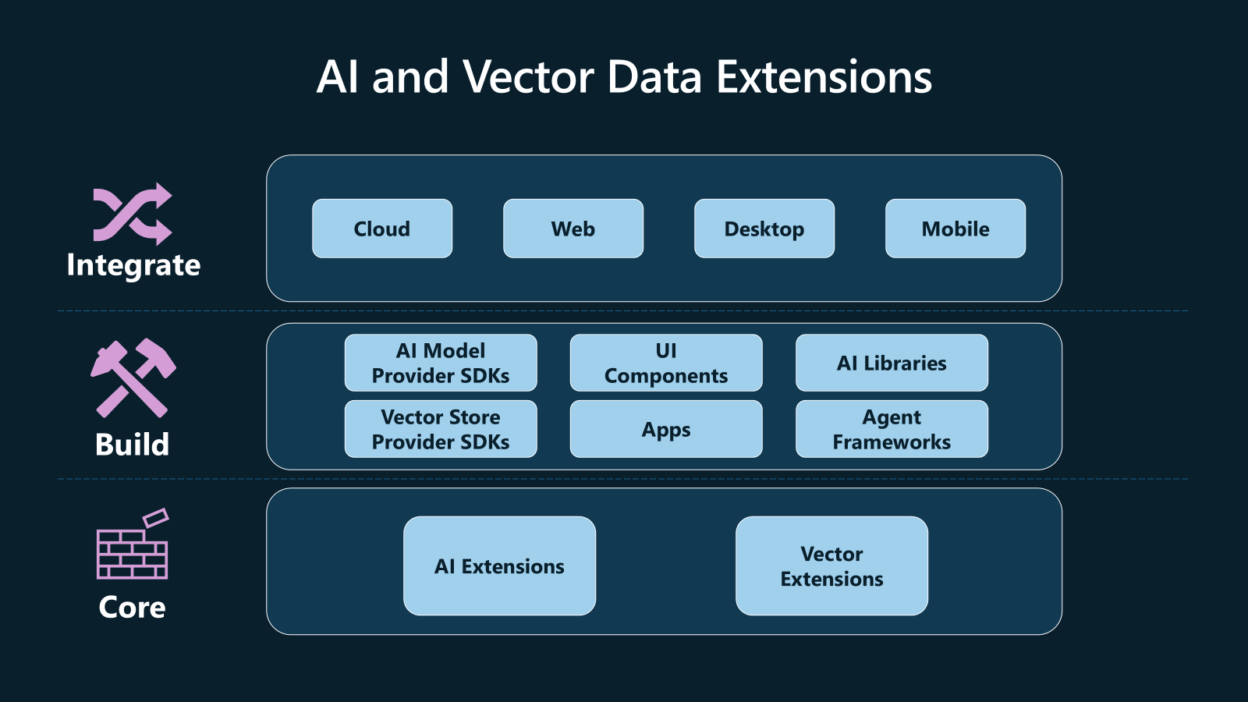

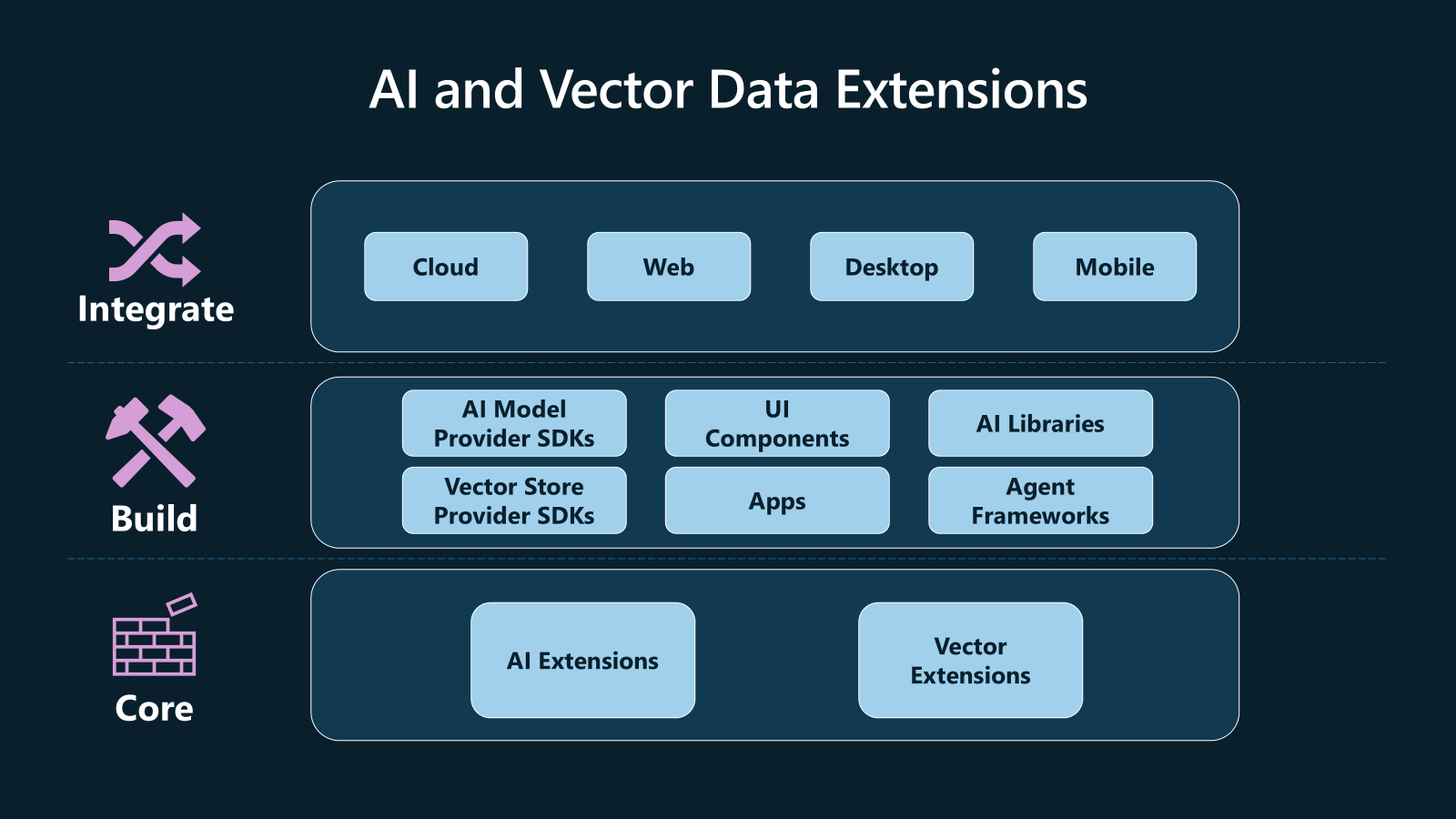

What are the AI and Vector Data Extensions?

The AI and Vector Data Extensions are a set of .NET libraries that offer shared abstractions and utilities for working with AI models and vector stores.

These libraries are available as NuGet packages:

- Microsoft.Extensions.AI.Abstractions – Defines common types and abstractions for AI models.

- Microsoft.Extensions.AI – Builds on the abstractions with utilities and middleware such as logging, caching, telemetry, and function invocation.

- Microsoft.Extensions.VectorData.Abstractions – Provides exchange types and abstractions for vector stores.

These packages serve as foundational building blocks for higher-level components, promoting:

- Interoperability – Libraries can work together more easily by targeting the same abstractions.

- Extensibility – Developers can build on top of shared types to add new capabilities.

- Consistency – A unified programming model across different implementations reduces integration complexity.

Why target these abstractions?

If you’re building a library, it’s critical to remain agnostic to specific AI or vector systems. By depending only on these shared abstractions, you avoid locking your consumers into particular providers and ensure your library can interoperate with others. This promotes flexibility and broad compatibility across the ecosystem.

If you’re building an application, you have more freedom to choose concrete implementations.

What does this mean in practice?

- Providers can implement these abstractions to integrate smoothly with the ecosystem.

- Library authors should build on the abstractions to enable composability and avoid forcing provider choices on consumers.

- Application developers benefit from a consistent API, making it easier to switch or combine providers without major code changes.
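To make the guidance for library authors concrete, here is a minimal sketch of a component that depends only on the IChatClient abstraction. The Summarizer type and its prompt are hypothetical names chosen for illustration; they are not part of the extensions.

```csharp
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.AI;

// Hypothetical library type (not part of the extensions): it knows nothing
// about Ollama, Azure OpenAI, or any other concrete provider.
public sealed class Summarizer
{
    private readonly IChatClient _chatClient;

    public Summarizer(IChatClient chatClient) => _chatClient = chatClient;

    public async Task<string> SummarizeAsync(string text, CancellationToken cancellationToken = default)
    {
        // GetResponseAsync(string, ...) is an extension method over IChatClient.
        ChatResponse response = await _chatClient.GetResponseAsync(
            $"Summarize the following text in one sentence:\n{text}",
            cancellationToken: cancellationToken);

        return response.Text;
    }
}
```

An application would construct it with whichever client it has configured, for example `new Summarizer(chatClient)`, so the provider choice stays with the consumer.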

Key Scenarios and Use Cases

The AI and Vector Data Extensions provide the essential building blocks that make it easier to implement advanced AI capabilities in your applications. By offering consistent abstractions for features like structured output, tool invocation, and observability, these libraries enable you to build robust, maintainable, and production-ready solutions tailored to your specific needs.

Below are examples of common scenarios where these building blocks come together to empower real-world AI-powered applications.

Portability across model and vector store providers

Whether you’re using different model providers for local development and production, or building agents that rely on various models, the AI and Vector Data extensions offer a consistent set of APIs across environments.

Thanks to a growing ecosystem of official and community-supported packages that implement these abstractions, it’s easy to integrate models and vector databases into your applications.

Below is an example of how you can switch between providers based on the environment, while keeping your code clean and consistent:

    IChatClient chatClient =
        environment == "Development"
            ? new OllamaApiClient("YOUR-OLLAMA-ENDPOINT", "qwen3")
            : new AzureOpenAIClient(new Uri("YOUR-AZURE-OPENAI-ENDPOINT"), new DefaultAzureCredential())
                .GetChatClient("gpt-4.1")
                .AsIChatClient();

    // Stream the response and print each update as it arrives
    await foreach (var update in chatClient.GetStreamingResponseAsync("What is AI?"))
    {
        Console.Write(update.Text);
    }

    IEmbeddingGenerator<string, Embedding<float>> embeddingGenerator =
        environment == "Development"
            ? new OllamaApiClient("YOUR-OLLAMA-ENDPOINT", "all-minilm")
            : new AzureOpenAIClient(new Uri("YOUR-AZURE-OPENAI-ENDPOINT"), new DefaultAzureCredential())
                .GetEmbeddingClient("text-embedding-3-small")
                .AsIEmbeddingGenerator();

    var embedding = await embeddingGenerator.GenerateAsync("What is AI?");

    VectorStoreCollection<int, Product> collection =
        environment == "Development"
            ? new SqliteCollection<int, Product>(
                "Data Source=products.db",
                "products",
                new SqliteCollectionOptions { EmbeddingGenerator = embeddingGenerator })
            : new QdrantCollection<int, Product>(
                new QdrantClient("YOUR-HOSTED-ENDPOINT"),
                "products",
                true,
                new QdrantCollectionOptions { EmbeddingGenerator = embeddingGenerator });

    await collection.UpsertAsync(...);

Progressively add functionality

Using AI models is just the beginning. Building production-grade applications requires logging, caching, and observability through tools like OpenTelemetry.

The AI extensions support these needs out of the box. They integrate seamlessly with existing .NET primitives, allowing you to plug in your own ILogger, IDistributedCache, and OpenTelemetry-compliant tools without reinventing the wheel.

Here’s a simple example of how to enable these features:

    IChatClient chatClient =
        new ChatClientBuilder(...)
            .UseLogging()
            .UseDistributedCache()
            .UseOpenTelemetry()
            .Build();

Need more control? The extensions are fully extensible. You can inject custom logic, such as rate limiting, directly into the pipeline.

    RateLimiter rateLimiter = new ConcurrencyLimiter(new()
    {
        PermitLimit = 1,
        QueueLimit = int.MaxValue
    });

    IChatClient client =
        new ChatClientBuilder(...)
            .UseDistributedCache()
            .Use(async (messages, options, nextAsync, cancellationToken) =>
            {
                // Wait for a permit before forwarding the request to the next client in the pipeline
                using var lease = await rateLimiter.AcquireAsync(permitCount: 1, cancellationToken).ConfigureAwait(false);

                if (!lease.IsAcquired)
                    throw new InvalidOperationException("Unable to acquire lease.");

                await nextAsync(messages, options, cancellationToken);
            })
            .UseOpenTelemetry()
            .Build();

Handle different content and structure their output

Generative AI models can process more than just text—they’re also capable of handling images, audio, and other data types.

To support this, the AI extensions provide flexible primitives for representing diverse data formats.
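As a small sketch of what these primitives look like, the snippet below builds one user message that mixes text, in-memory binary data, and a URI reference. The byte array is a placeholder standing in for a real image file, and the prompt text is made up for illustration.

```csharp
using System;
using Microsoft.Extensions.AI;

// Placeholder bytes standing in for a real PNG file (assumption for illustration).
byte[] imageBytes = [0x89, 0x50, 0x4E, 0x47];

ChatMessage message = new(ChatRole.User,
[
    new TextContent("Describe what you see."),
    // In-memory binary content with its media type
    new DataContent(imageBytes, "image/png"),
    // Content referenced by URI rather than embedded in the request
    new UriContent(new Uri("https://host/someimage.jpg"), mediaType: "image/jpeg"),
]);

Console.WriteLine(message.Contents.Count); // 3
```

Whether a given model accepts a particular media type depends on the provider, so treat the combination above as an example rather than a guarantee.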

One common challenge is that model outputs are often unstructured, making integration with your application more difficult.

Fortunately, many models now support structured output—a feature where responses are formatted according to a predefined schema, such as JSON. This adds reliability and predictability to the model’s responses, simplifying integration.

The AI extensions are designed to work seamlessly with structured outputs, making it easy to map model responses directly to your C# types.

    record Item(string Name, float Price);

    enum Category { Food, Electronics, Clothing, Services }

    record Receipt(string Merchant, List<Item> Items, float Total, Category Category);

    var imageUri = new Uri("https://host/someimage.jpg");

    List<AIContent> content = [
        new TextContent("Process this receipt"),
        new UriContent(imageUri, mediaType: "image/jpeg")
    ];

    var message = new ChatMessage(ChatRole.User, content);

    // Ask for a response that deserializes directly into the Receipt type
    var response = await chatClient.GetResponseAsync<Receipt>(message);

    response.TryGetResult(out var receiptData);

    Console.WriteLine($"Merchant: {receiptData.Merchant} | Total: {receiptData.Total} | Category: {receiptData.Category}");

    // Merchant: ECOSPACE | Total: 49.64 | Category: Food

Tool Calling

AI models can process data and understand natural language, but they can’t perform actions on their own. To take meaningful action, they need access to external tools and systems.

This is where tool calling comes in—a feature supported by many modern generative AI models that allows them to invoke functions based on user intent.

Similar to structured output, the AI extensions make it easy to take advantage of this capability in your applications.

In this example, the CalculateTax method is registered as an AI-invokable function. The model can automatically decide when to call it based on the user’s request. If you need more control over this behavior, it can be easily configured.

    record ReceiptTotal(float SubTotal, float TaxAmount, float TaxRate, float Total);

    [Description("Calculate tax given a receipt and tax rate")]
    float CalculateTax(Receipt receipt, float taxRate)
    {
        // Tax amount only; the total after tax is receipt.Total plus this value
        return receipt.Total * taxRate;
    }

    // Wrap the existing client so tool calls requested by the model are invoked automatically
    IChatClient functionChatClient =
        chatClient
            .AsBuilder()
            .UseFunctionInvocation()
            .Build();

    var message = new ChatMessage(ChatRole.User, [
        new TextContent("Here is information from a recent purchase"),
        new TextContent(JsonSerializer.Serialize(receiptData)),
        new TextContent("What is the total price after tax given a tax rate of 10%?")
    ]);

    var response = await functionChatClient.GetResponseAsync<ReceiptTotal>(
        message,
        new ChatOptions { Tools = [AIFunctionFactory.Create(CalculateTax)] });

    response.TryGetResult(out var receiptTotal);

    Console.WriteLine($"SubTotal: {receiptTotal.SubTotal} | TaxAmount: {receiptTotal.TaxAmount} | TaxRate: {receiptTotal.TaxRate} | Total: {receiptTotal.Total}");

    // SubTotal: 49.64 | TaxAmount: 4.96 | TaxRate: 0.1 | Total: 54.6

Simplified embedding generation

Semantic search—and by extension, Retrieval-Augmented Generation (RAG)—is a powerful pattern for building intelligent applications. While the core tasks, like embedding generation, are conceptually straightforward, they often involve repetitive, boilerplate code.

At the heart of this pattern are vector databases, which store numerical representations of data (called embeddings) and enable fast similarity search. They let applications retrieve semantically relevant information—not just exact keyword matches—making them essential for tasks like search, question answering, and recommendations.
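To give a feel for what "similarity" means here, the following self-contained sketch computes cosine similarity between toy embeddings. It is only an illustration of the comparison itself: the vectors and names are made up, and a real vector store typically relies on indexes rather than the direct comparison shown here.

```csharp
using System;

// Cosine similarity: dot(a, b) / (|a| * |b|).
static float CosineSimilarity(float[] a, float[] b)
{
    if (a.Length != b.Length)
        throw new ArgumentException("Vectors must have the same length.");

    float dot = 0f, normA = 0f, normB = 0f;
    for (int i = 0; i < a.Length; i++)
    {
        dot += a[i] * b[i];
        normA += a[i] * a[i];
        normB += b[i] * b[i];
    }

    return dot / (MathF.Sqrt(normA) * MathF.Sqrt(normB));
}

// Toy 3-dimensional "embeddings"; real ones typically have hundreds or thousands of dimensions.
float[] query   = [1f, 1f, 0f];
float[] kettle  = [2f, 2f, 0f];   // points in the same direction as the query
float[] toaster = [0f, 0f, 1f];   // orthogonal to the query

Console.WriteLine(CosineSimilarity(query, kettle));   // approximately 1 (most similar)
Console.WriteLine(CosineSimilarity(query, toaster));  // 0 (unrelated)
```

A search then amounts to ranking stored vectors by this score against the query vector and returning the top matches.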

The AI and Vector Data extensions are designed to streamline this process by working seamlessly together.

When you combine a vector store with an embedding generator, the extensions make it easy to map your existing C# data models directly to the vector store. These abstractions eliminate much of the boilerplate, so you can focus on your application logic—not infrastructure details.

And because these are just abstractions, they’re inherently modular. You can swap out vector store or embedding providers without changing your application logic—making it easy to adapt to new tools or scale as your needs evolve.

In the example below, we provide plain text. The extensions handle the rest—automatically generating the embedding using the configured generator and storing it in the appropriate vector field, all with minimal code.

    // Using Microsoft.SemanticKernel.Connectors.SqliteVec package
    IEmbeddingGenerator<string, Embedding<float>> embeddingGenerator = ...;

    VectorStoreCollection<int, Product> collection = new SqliteCollection<int, Product>(
        "Data Source=products.db",
        "products",
        new SqliteCollectionOptions
        {
            EmbeddingGenerator = embeddingGenerator,
        });

    await collection.UpsertAsync(new Product
    {
        Id = 1,
        Name = "Kettle",
        TenantId = 5,
        // Plain text; the configured generator turns it into a vector on upsert
        Embedding = "This kettle is great for making tea, it heats up quickly and has a large capacity."
    });

    record Product
    {
        [VectorStoreKey]
        public int Id { get; set; }

        [VectorStoreData]
        public required string Name { get; set; }

        [VectorStoreData]
        public int TenantId { get; set; }

        [VectorStoreVector(Dimensions: 1536)]
        public string? Embedding { get; set; }
    }

Powerful search capabilities

Depending on your scenario and data model, you may need more advanced search capabilities. The Vector Data abstractions support a range of powerful search features—including multiple similarity metrics, vector search, hybrid search, and filtering.

Just like with embedding generation, the querying process is streamlined. You can pass in plain text, and the abstractions handle generating the embedding, applying the appropriate similarity metric, and retrieving the most relevant results.

Filtering is also built-in and designed to feel familiar. The filter expressions use a syntax similar to LINQ predicates, so you can leverage your existing C# skills without needing to learn a new query language.

In the example below, we’re searching for products that match a natural language query, filtered by tenant:

    IEmbeddingGenerator<string, Embedding<float>> embeddingGenerator = ...;

    VectorStoreCollection<int, Product> collection = new SqliteCollection<int, Product>(
        "Data Source=products.db",
        "products",
        new SqliteCollectionOptions
        {
            EmbeddingGenerator = embeddingGenerator,
        });

    var query = "Find me kettles that can hold a lot of water";

    // The query text is embedded automatically; the filter restricts results to one tenant
    await foreach (var result in collection.SearchAsync(query, top: 5, new() { Filter = r => r.TenantId == 8 }))
    {
        yield return result.Record;
    }

Plugs right into your existing dependency injection configurations

Modern .NET applications rely on dependency injection (DI) to manage configuration and lifetimes of services—and the AI and Vector Data extensions are built to align with that model.

Whether you’re wiring up components for local development or configuring services for production, the extensions register cleanly into your existing DI container. This means you can compose and configure AI components just like any other part of your application.

    builder.Services.AddChatClient(sp => {...})
        .UseLogging()
        .UseDistributedCache()
        .UseOpenTelemetry();

    builder.Services.AddEmbeddingGenerator(sp => {...})
        .UseLogging()
        .UseDistributedCache()
        .UseOpenTelemetry();

    // SQLite implementation of Vector Data
    builder.Services.AddSqliteCollection<int, Product>("Products", "Data Source=/tmp/products.db");

A growing ecosystem

Adoption of these extensions has been strong and continues to grow across the ecosystem.

In just a few months, the extensions have surpassed 3 million downloads, and nearly 100 public NuGet packages now take a dependency on them.

We’re seeing adoption across a wide range of official and community projects, including:

- Libraries: Model Context Protocol (MCP), AI Evaluations, Pieces

- Agent Frameworks: Semantic Kernel, AutoGen

- Playgrounds: AI Dev Gallery

- Provider SDKs: Azure OpenAI, OllamaSharp, Anthropic, Google, HuggingFace, Sqlite, Qdrant, CosmosDB, AzureSQL

- UI Components: DevExpress, Syncfusion, Progress Telerik

Below, we highlight a few of these integrations in more detail.

Model Context Protocol (MCP) C# SDK

MCP is an open standard that acts as a universal adapter for AI models. It enables models to interact with external data sources, tools, and APIs through a consistent, standardized interface. This simplifies integration by allowing models to invoke functions or access data without needing custom code for each service.

We partnered with Anthropic to deliver an official MCP C# SDK. Built on top of shared AI abstractions like AIContent, AIFunction, and others, the SDK enables MCP clients and servers to easily define tools and invoke them using IChatClient implementations.

    var mcpClient = await McpClientFactory.CreateAsync(clientTransport, mcpClientOptions, loggerFactory);

    var tools = await mcpClient.ListToolsAsync();

    var response = await _chatClient.GetResponseAsync<List<TripOption>>(messages, new ChatOptions { Tools = [.. tools ] });

Evaluations

Evaluations play a crucial role in building trustworthy AI applications by helping ensure safety, reliability, and alignment with intended behavior. They allow developers to systematically test and validate AI models against real-world scenarios and quality standards.

The .NET AI Evaluation libraries build on top of the AI Extensions to provide evaluation tools that integrate into your development workflow, enabling continuous monitoring and improvement of your AI systems.

To dive deeper into these evaluation capabilities and see practical examples, check out the following blog posts:

- Unlock new possibilities for AI Evaluations for .NET

- Evaluating content safety in your .NET AI applications

Progress Telerik

Since the launch of ChatGPT, chat has become the primary way users interact with language models.

To offer a similar experience in their own applications, developers have often had to build custom chat UI components from scratch.

Telerik has made this much easier by providing a set of ready-to-use chat UI components for Blazor. These components simplify the process of adding conversational interfaces to web apps.

Built on top of the AI Extensions, Telerik’s solution also allows developers to switch between different model providers with minimal code changes—making it both flexible and future-proof.

Semantic Kernel

Semantic Kernel offers high-level components that make it easier to integrate AI into your applications.

We’re entering the agentic era—where AI agents need access to models, data, and tools to perform tasks effectively. With Semantic Kernel, you can build agents using the same AI extension primitives you’re already familiar with, such as IChatClient.

Here’s an example of how to use IChatClient as the foundation for an agent in Semantic Kernel’s Agent Framework:

    var builder = Kernel.CreateBuilder();

    // Add your IChatClient
    builder.Services.AddChatClient((sp) =>
    {
        return new ChatClientBuilder(...)
            .UseLogging(...)
            .UseFunctionInvocation()
            .Build();
    });

    builder.Plugins.AddFromFunctions(
        nameof(CalculateTax),
        [AIFunctionFactory.Create(CalculateTax).AsKernelFunction()]);

    var kernel = builder.Build();

    var agent = new ChatCompletionAgent
    {
        Name = "TravelAgent",
        Description = "A travel agent that helps users with travel plans",
        Instructions = "Help users come up with a travel itinerary",
        Kernel = kernel,
        Arguments = new KernelArguments(
            new PromptExecutionSettings
            {
                FunctionChoiceBehavior = FunctionChoiceBehavior.Auto()
            })
    };

This is just a basic example. Check out the following post for a deeper look at how Semantic Kernel builds on top of the AI Extensions.

Semantic Kernel also offers a unified set of connectors for vector databases, built on top of the Vector Data Extensions. These connectors simplify integration by providing a consistent programming model.

For more details, check out this post on how Semantic Kernel builds its connectors using the Vector Data Extensions.

AI Dev Gallery

The AI Dev Gallery is a Windows application that serves as a comprehensive playground for AI development with .NET. It offers everything you need to explore, experiment with, and implement AI features in your applications—entirely offline, with no dependency on cloud services.

The AI Dev Gallery is built on top of the AI and Vector Data Extensions, providing a solid foundation for model and data integrations. It also leverages:

- Microsoft.ML.Tokenizers for efficient text preprocessing and tokenization.

- System.Numerics.Tensors for high-performance processing of model outputs.

Together, these components make the AI Dev Gallery a powerful tool for local, end-to-end AI experimentation and development.

To learn more about the AI Dev Gallery, see the following blog post.

Get started today

Try out the .NET AI Templates to get started with the AI and Vector Data extensions.

Make sure to check out the documentation to learn more.

We can’t wait to see what you build.

The post AI and Vector Data Extensions are now Generally Available (GA) appeared first on .NET Blog.